root@autodl-container-c6d2118f3c-5efc0d52:~/autodl-tmp/ChatGLM-6B/ptuning# bash zds_train_finetune.sh

[2023-04-21 14:51:41,110] [INFO] [multinode_runner.py:65:get_cmd] Running on the following workers: 36.111.128.35,36.139.225.141

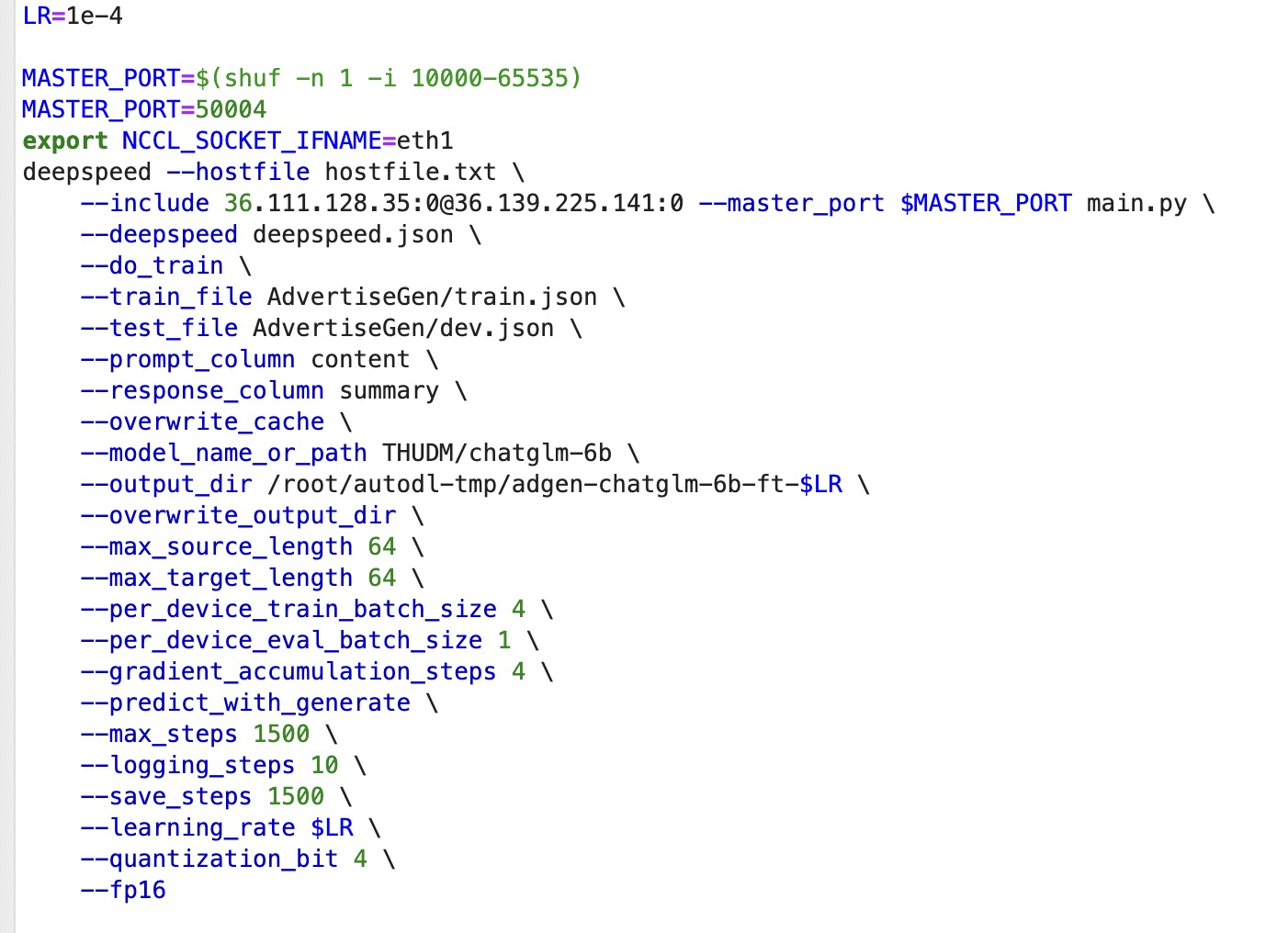

[2023-04-21 14:51:41,111] [INFO] [runner.py:504:main] cmd = pdsh -S -f 1024 -w 36.111.128.35,36.139.225.141 export NCCL_P2P_DISABLE=1; export NCCL_VERSION=2.9.6-1; export NCCL_SOCKET_IFNAME=eth1; export PYTHONPATH=/root/autodl-tmp/ChatGLM-6B/ptuning; cd /root/autodl-tmp/ChatGLM-6B/ptuning; /root/miniconda3/bin/python -u -m deepspeed.launcher.launch --world_info=eyIzNi4xMTEuMTI4LjM1IjogWzBdLCAiMzYuMTM5LjIyNS4xNDEiOiBbMF19 --node_rank=%n --master_addr=36.111.128.35 --master_port=50004 main.py --deepspeed 'deepspeed.json' --do_train --train_file 'AdvertiseGen/train.json' --test_file 'AdvertiseGen/dev.json' --prompt_column 'content' --response_column 'summary' --overwrite_cache --model_name_or_path 'THUDM/chatglm-6b' --output_dir '/root/autodl-tmp/adgen-chatglm-6b-ft-1e-4' --overwrite_output_dir --max_source_length '64' --max_target_length '64' --per_device_train_batch_size '4' --per_device_eval_batch_size '1' --gradient_accumulation_steps '4' --predict_with_generate --max_steps '1500' --logging_steps '10' --save_steps '1500' --learning_rate '1e-4' --quantization_bit '4' --fp16

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:129:main] 0 NCCL_P2P_DISABLE=1

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:129:main] 0 NCCL_VERSION=2.9.6-1

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:129:main] 0 NCCL_SOCKET_IFNAME=eth1

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:136:main] WORLD INFO DICT: {'36.111.128.35': [0], '36.139.225.141': [0]}

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:142:main] nnodes=2, num_local_procs=1, node_rank=0

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:155:main] global_rank_mapping=defaultdict(<class 'list'>, {'36.111.128.35': [0], '36.139.225.141': [1]})

36.111.128.35: [2023-04-21 14:51:43,278] [INFO] [launch.py:156:main] dist_world_size=2

36.111.128.35: [2023-04-21 14:51:43,279] [INFO] [launch.py:158:main] Setting CUDA_VISIBLE_DEVICES=0

36.139.225.141: [2023-04-21 14:51:43,733] [INFO] [launch.py:129:main] 1 NCCL_P2P_DISABLE=1

36.139.225.141: [2023-04-21 14:51:43,734] [INFO] [launch.py:129:main] 1 NCCL_VERSION=2.9.6-1

36.139.225.141: [2023-04-21 14:51:43,734] [INFO] [launch.py:129:main] 1 NCCL_SOCKET_IFNAME=eth1

36.139.225.141: [2023-04-21 14:51:43,734] [INFO] [launch.py:136:main] WORLD INFO DICT: {'36.111.128.35': [0], '36.139.225.141': [0]}

36.139.225.141: [2023-04-21 14:51:43,734] [INFO] [launch.py:142:main] nnodes=2, num_local_procs=1, node_rank=1

36.139.225.141: [2023-04-21 14:51:43,736] [INFO] [launch.py:155:main] global_rank_mapping=defaultdict(<class 'list'>, {'36.111.128.35': [0], '36.139.225.141': [1]})

36.139.225.141: [2023-04-21 14:51:43,736] [INFO] [launch.py:156:main] dist_world_size=2

36.139.225.141: [2023-04-21 14:51:43,736] [INFO] [launch.py:158:main] Setting CUDA_VISIBLE_DEVICES=0

36.111.128.35: [2023-04-21 14:51:46,188] [INFO] [comm.py:633:init_distributed] Initializing TorchBackend in DeepSpeed with backend nccl

36.111.128.35: Traceback (most recent call last):

36.111.128.35: File "main.py", line 431, in <module>

36.111.128.35: main()

36.111.128.35: File "main.py", line 58, in main

36.111.128.35: model_args, data_args, training_args = parser.parse_args_into_dataclasses()

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/transformers/hf_argparser.py", line 332, in parse_args_into_dataclasses

36.111.128.35: obj = dtype(**inputs)

36.111.128.35: File "<string>", line 113, in __init__

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/transformers/training_args.py", line 1227, in __post_init__

36.111.128.35: and (self.device.type != "cuda")

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/transformers/training_args.py", line 1659, in device

36.111.128.35: return self._setup_devices

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/transformers/utils/generic.py", line 54, in __get__

36.111.128.35: cached = self.fget(obj)

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/transformers/training_args.py", line 1594, in _setup_devices

36.111.128.35: deepspeed.init_distributed(timeout=timedelta(seconds=self.ddp_timeout))

36.111.128.35: File "/root/DeepSpeed/deepspeed/comm/comm.py", line 637, in init_distributed

36.111.128.35: cdb = TorchBackend(dist_backend, timeout, init_method)

36.111.128.35: File "/root/DeepSpeed/deepspeed/comm/torch.py", line 30, in __init__

36.111.128.35: self.init_process_group(backend, timeout, init_method)

36.111.128.35: File "/root/DeepSpeed/deepspeed/comm/torch.py", line 34, in init_process_group

36.111.128.35: torch.distributed.init_process_group(backend,

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/torch/distributed/distributed_c10d.py", line 576, in init_process_group

36.111.128.35: store, rank, world_size = next(rendezvous_iterator)

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/torch/distributed/rendezvous.py", line 229, in _env_rendezvous_handler

36.111.128.35: store = _create_c10d_store(master_addr, master_port, rank, world_size, timeout)

36.111.128.35: File "/root/miniconda3/lib/python3.8/site-packages/torch/distributed/rendezvous.py", line 157, in _create_c10d_store

36.111.128.35: return TCPStore(

36.111.128.35: RuntimeError: connect() timed out. Original timeout was 1800000 ms.

36.139.225.141: Traceback (most recent call last):

36.139.225.141: File "main.py", line 431, in <module>

36.139.225.141: main()

36.139.225.141: File "main.py", line 58, in main

36.139.225.141: model_args, data_args, training_args = parser.parse_args_into_dataclasses()

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/transformers/hf_argparser.py", line 332, in parse_args_into_dataclasses

36.139.225.141: obj = dtype(**inputs)

36.139.225.141: File "<string>", line 113, in __init__

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/transformers/training_args.py", line 1227, in __post_init__

36.139.225.141: and (self.device.type != "cuda")

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/transformers/training_args.py", line 1659, in device

36.139.225.141: return self._setup_devices

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/transformers/utils/generic.py", line 54, in __get__

36.139.225.141: cached = self.fget(obj)

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/transformers/training_args.py", line 1594, in _setup_devices

36.139.225.141: deepspeed.init_distributed(timeout=timedelta(seconds=self.ddp_timeout))

36.139.225.141: File "/root/DeepSpeed/deepspeed/comm/comm.py", line 637, in init_distributed

36.139.225.141: cdb = TorchBackend(dist_backend, timeout, init_method)

36.139.225.141: File "/root/DeepSpeed/deepspeed/comm/torch.py", line 30, in __init__

36.139.225.141: self.init_process_group(backend, timeout, init_method)

36.139.225.141: File "/root/DeepSpeed/deepspeed/comm/torch.py", line 34, in init_process_group

36.139.225.141: torch.distributed.init_process_group(backend,

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/torch/distributed/distributed_c10d.py", line 576, in init_process_group

36.139.225.141: store, rank, world_size = next(rendezvous_iterator)

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/torch/distributed/rendezvous.py", line 229, in _env_rendezvous_handler

36.139.225.141: store = _create_c10d_store(master_addr, master_port, rank, world_size, timeout)

36.139.225.141: File "/root/miniconda3/lib/python3.8/site-packages/torch/distributed/rendezvous.py", line 157, in _create_c10d_store

36.139.225.141: return TCPStore(

36.139.225.141: RuntimeError: connect() timed out. Original timeout was 1800000 ms.

36.111.128.35: [2023-04-21 15:21:47,254] [INFO] [launch.py:286:sigkill_handler] Killing subprocess 864

36.111.128.35: [2023-04-21 15:21:47,255] [ERROR] [launch.py:292:sigkill_handler] ['/root/miniconda3/bin/python', '-u', 'main.py', '--local_rank=0', '--deepspeed', 'deepspeed.json', '--do_train', '--train_file', 'AdvertiseGen/train.json', '--test_file', 'AdvertiseGen/dev.json', '--prompt_column', 'content', '--response_column', 'summary', '--overwrite_cache', '--model_name_or_path', 'THUDM/chatglm-6b', '--output_dir', '/root/autodl-tmp/adgen-chatglm-6b-ft-1e-4', '--overwrite_output_dir', '--max_source_length', '64', '--max_target_length', '64', '--per_device_train_batch_size', '4', '--per_device_eval_batch_size', '1', '--gradient_accumulation_steps', '4', '--predict_with_generate', '--max_steps', '1500', '--logging_steps', '10', '--save_steps', '1500', '--learning_rate', '1e-4', '--quantization_bit', '4', '--fp16'] exits with return code = 1

pdsh@autodl-container-c6d2118f3c-5efc0d52: 36.111.128.35: ssh exited with exit code 1

36.139.225.141: [2023-04-21 15:21:48,304] [INFO] [launch.py:286:sigkill_handler] Killing subprocess 315

36.139.225.141: [2023-04-21 15:21:48,310] [ERROR] [launch.py:292:sigkill_handler] ['/root/miniconda3/bin/python', '-u', 'main.py', '--local_rank=0', '--deepspeed', 'deepspeed.json', '--do_train', '--train_file', 'AdvertiseGen/train.json', '--test_file', 'AdvertiseGen/dev.json', '--prompt_column', 'content', '--response_column', 'summary', '--overwrite_cache', '--model_name_or_path', 'THUDM/chatglm-6b', '--output_dir', '/root/autodl-tmp/adgen-chatglm-6b-ft-1e-4', '--overwrite_output_dir', '--max_source_length', '64', '--max_target_length', '64', '--per_device_train_batch_size', '4', '--per_device_eval_batch_size', '1', '--gradient_accumulation_steps', '4', '--predict_with_generate', '--max_steps', '1500', '--logging_steps', '10', '--save_steps', '1500', '--learning_rate', '1e-4', '--quantization_bit', '4', '--fp16'] exits with return code = 1

pdsh@autodl-container-c6d2118f3c-5efc0d52: 36.139.225.141: ssh exited with exit code 1

root@autodl-container-c6d2118f3c-5efc0d52:~/autodl-tmp/ChatGLM-6B/ptuning#
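
The `--world_info` value in the launch command is a base64-encoded JSON mapping from each host to its local GPU slots. As a small sanity check, here is a Python sketch that decodes it so it can be compared with the `WORLD INFO DICT` lines printed by both nodes. The variable names are illustrative and not taken from the repository.

```python
import base64
import json

# Value copied from the --world_info argument in the log above.
world_info_b64 = "eyIzNi4xMTEuMTI4LjM1IjogWzBdLCAiMzYuMTM5LjIyNS4xNDEiOiBbMF19"

# Decode the URL-safe base64 payload and parse it as JSON.
world_info = json.loads(base64.urlsafe_b64decode(world_info_b64).decode("utf-8"))

# Each key is a host, each value the list of local GPU indices used on it.
for host, slots in world_info.items():
    print(host, slots)
```

Both hosts appear with a single slot `[0]`, which matches `nnodes=2, num_local_procs=1` in the launcher output, so the host list itself appears to have been passed correctly.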

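There are two nodes: 36.111.128.35 is the master node and 36.139.225.141 is the worker node. Passwordless SSH login is configured in both directions between the two nodes, and the code from this repository is placed in the same directory on each. Running ds_trainfinetune.sh on the master node produces the error above.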
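
Since both ranks fail in `TCPStore` with `connect() timed out` after 1800000 ms (30 minutes), one thing worth checking is whether each node can open a TCP connection to the master address and port at all. Below is a minimal Python sketch of such a check. The helper name `can_connect` is hypothetical, and the address and port in the usage comment are the `--master_addr` and `--master_port` values from the log. Note that the port only accepts connections while a process is listening on it, so a `False` result outside a running job is not conclusive on its own.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


# Example usage, to be run on each node while the master is listening:
#   print(can_connect("36.111.128.35", 50004))
```

If the check fails from the worker node, or even from the master node itself, the cause may be a firewall rule, a port that is not exposed by the container, or an address that is not routable from inside it. Passwordless SSH working does not by itself guarantee that port 50004 is reachable.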

1

Environment

- OS:

- Python:

- Transformers:

- PyTorch:

- CUDA Support (`python -c "import torch; print(torch.cuda.is_available())"`) :